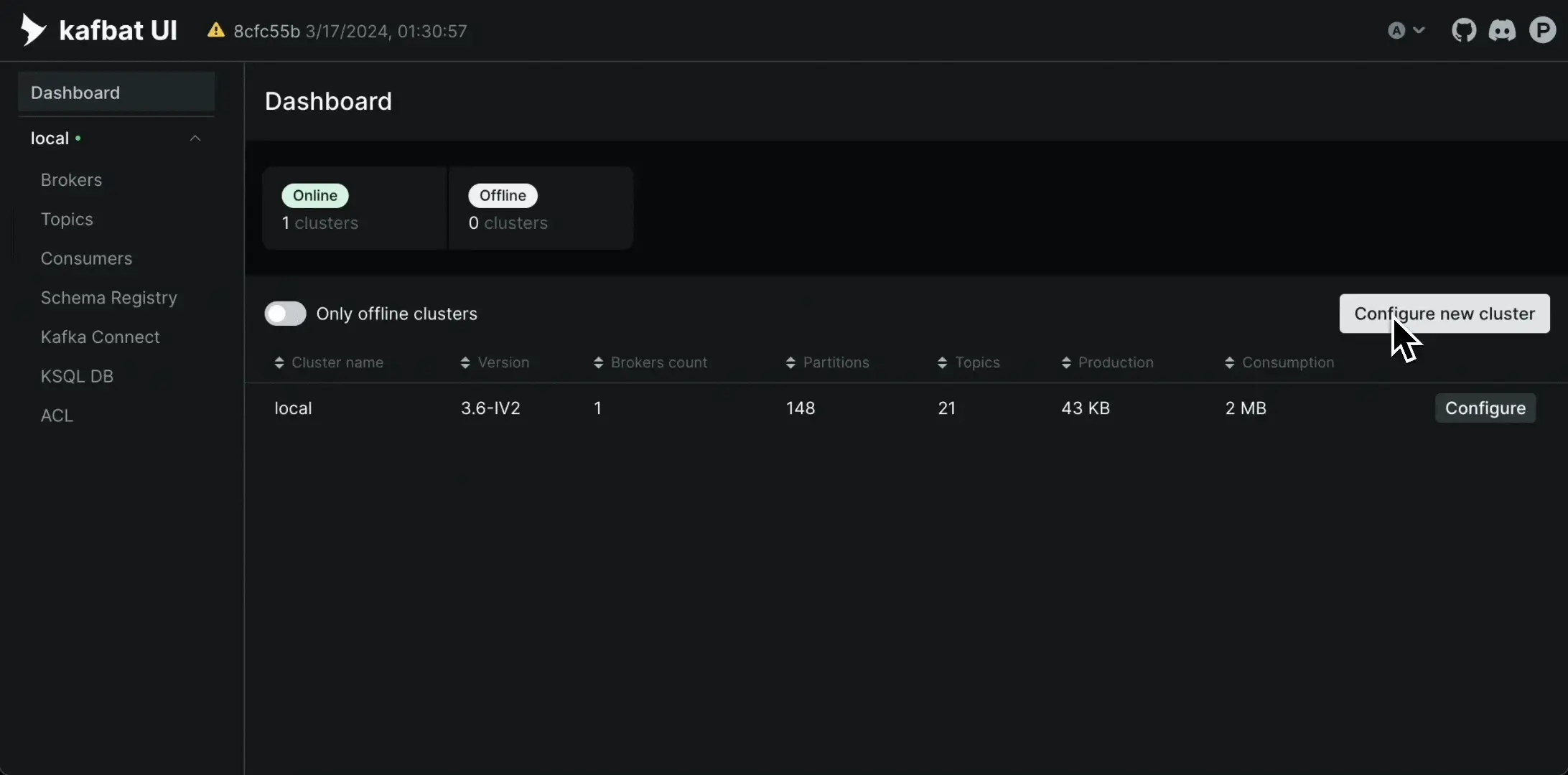

Versatile, fast and lightweight web UI for Kafka clusters

Kafbat UI makes your Kafka data flows observable, simplifies troubleshooting, and helps optimize performance, all in a lightweight dashboard.

Trusted by teams that rely on Kafka

Trusted by thousands, running in production at scale

10k+ GitHub stars

Widely adopted and trusted by Kafka teams around the world.

400M+ Docker pulls

Proven at scale—running in production across countless deployments.

5 stars on Product Hunt

Loved by users—top-rated for simplicity, speed, and reliability.

Everything you need to navigate Kafka

From messages to schemas to connectors — manage it all in one UI

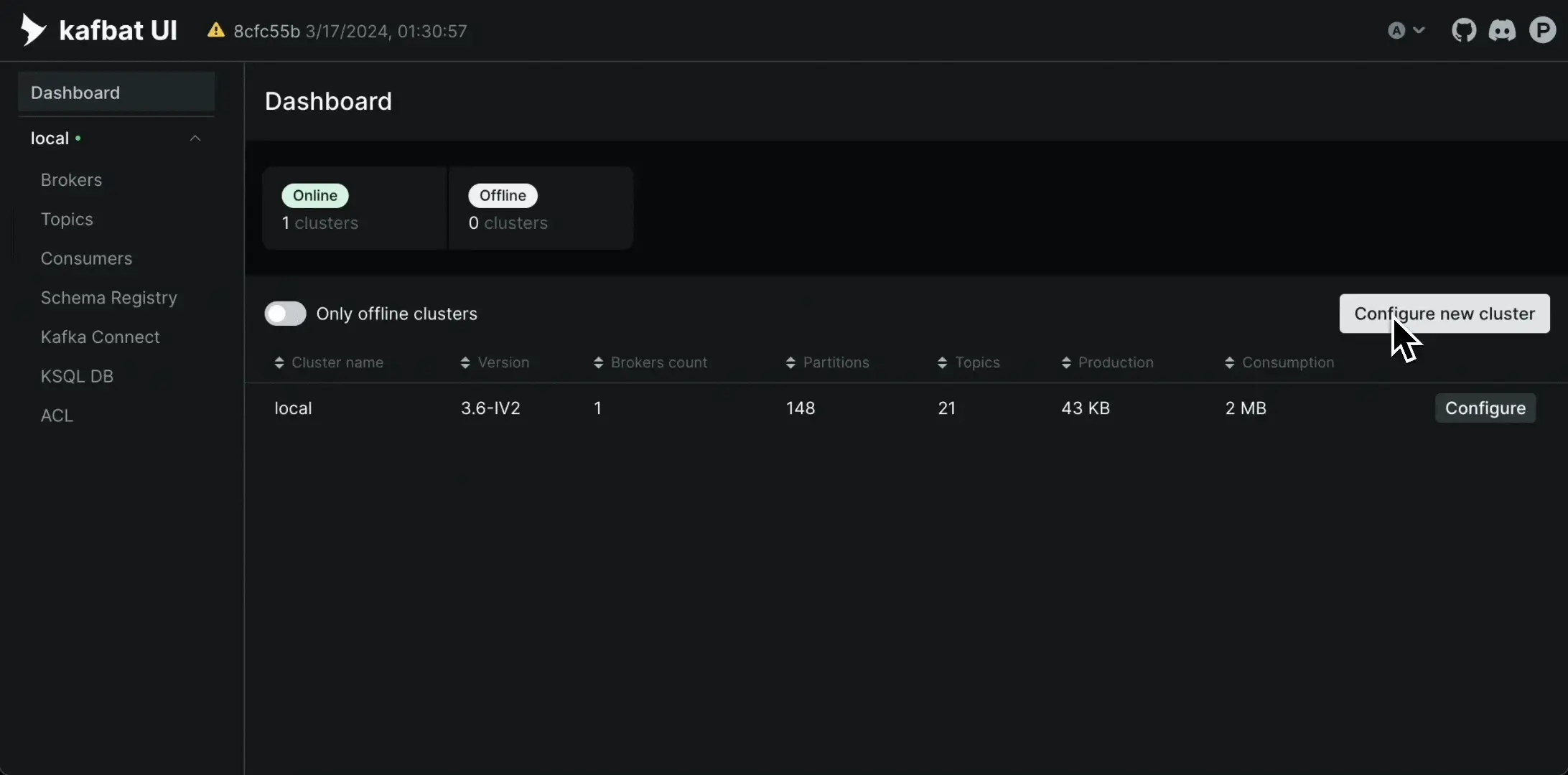

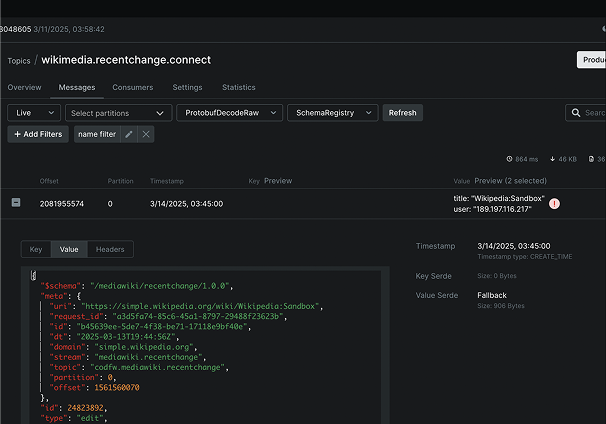

Messages

Find the right message without the hassle

Powerful topic-wide search with built-in CEL filtering helps your team pinpoint issues faster and navigate Kafka data with ease.

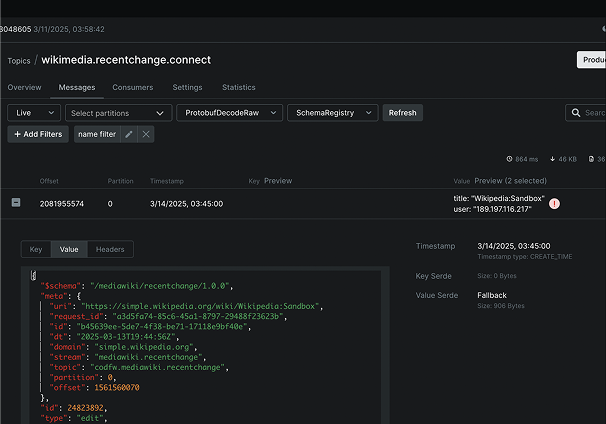

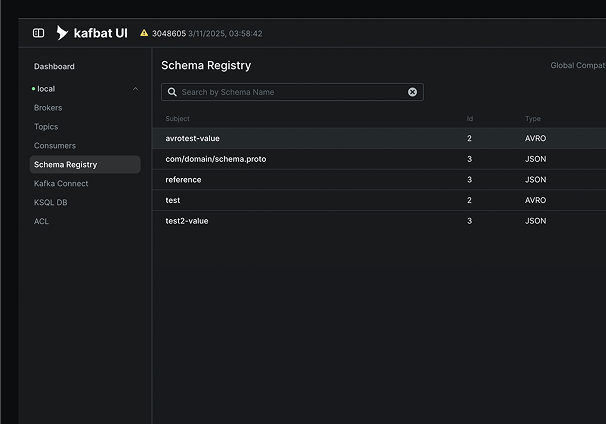

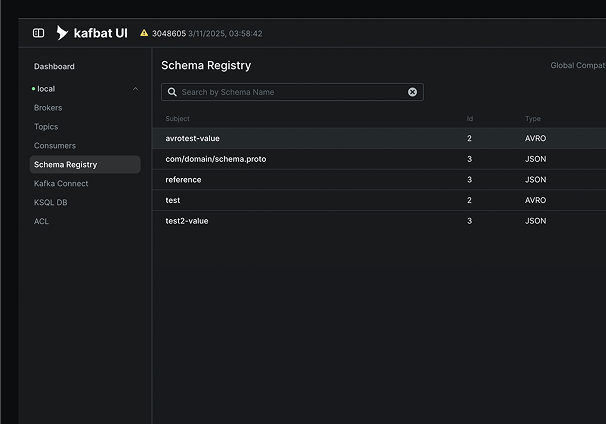

Schema registry

Schema management and beyond

Full support for Confluent Schema Registry—view, edit, and manage schemas effortlessly. Built-in SerDe support for Glue and SMILE, with APIs to extend and integrate your own pluggable SerDes.

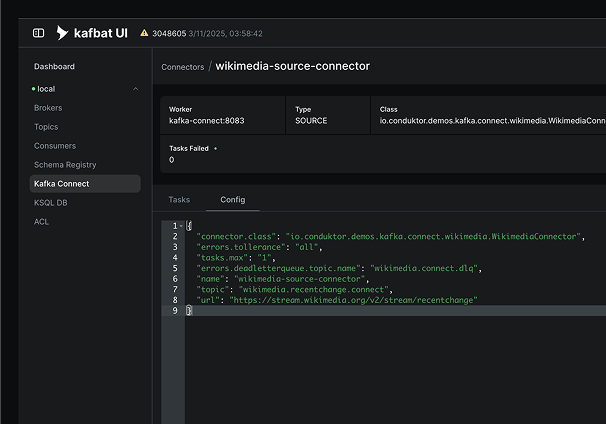

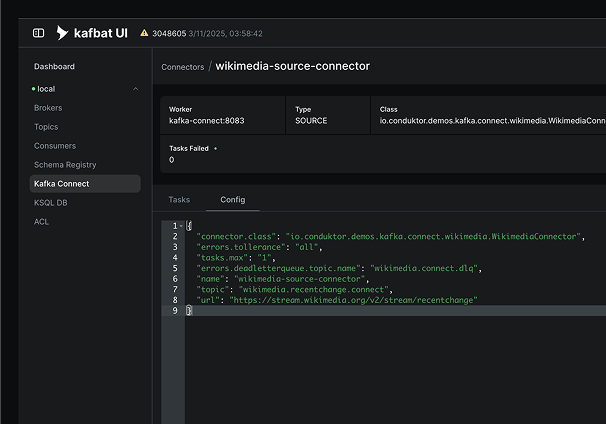

Kafka Connect

Take control of your Kafka Connect clusters

Get a full overview, view and edit connector configs, restart connectors, and manage tasks—all in one place.

Professional Services for Apache Kafka

Optimize, scale & secure your Kafka workflows

Need expert guidance to streamline your Kafka infrastructure? Our professional services ensure smooth deployment, performance tuning, and security hardening for your Kafka ecosystem.

Kafka Architecture Review

Optimize your clusters for peak performance

Custom UI Implementation

Tailored UI features for your Kafka needs

Performance & Scaling Support

Keep up with high-throughput demands

Security & Compliance

Secure your Kafka workflows with best practices

24/7 Enterprise Support

Get expert help when you need it most

Get started

Deploy Kafbat UI with a single command and streamline your Kafka operations

© 2025 Kafbat. All rights reserved.

Apache, Apache Kafka, Kafka, and associated open source project names are trademarks of the Apache Software Foundation.
